Rendering analysis - Cyberpunk 2077

(Spoiler-free post!)

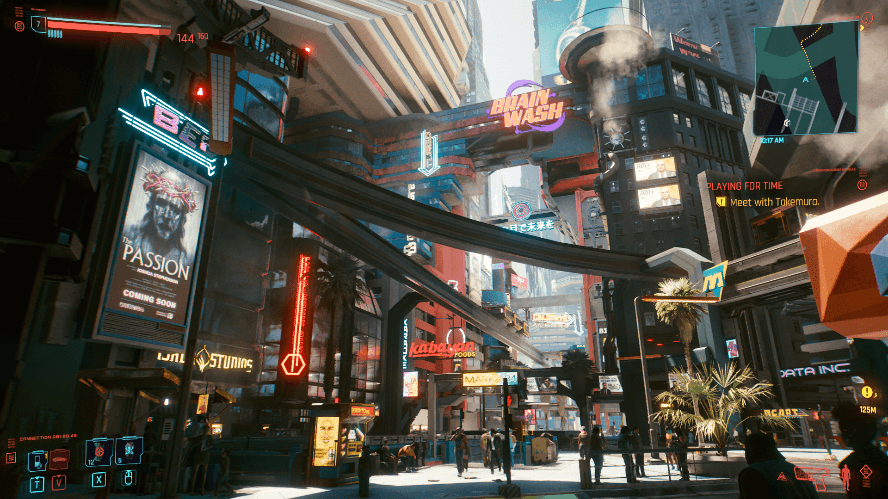

Quite a lot of people I know, inside and outside this industry, are playing the long-awaited “gargantuan maelstrom” now. After a few hours immersed in the stunning views (and bugs, of course) of Night City, I started wondering how one frame is rendered in Cyberpunk 2077 (every graphics programmer’s instinct!). So I opened RenderDoc and PIX and, luckily, REDengine didn’t behave as unfriendly as some other triple-A titles (yes, Watch Dogs 2, I mean you): I got a frame capture without any problems. (Disclaimer: I’m pretty sure that others like Alain Galvan, Anton Schreiner, Adrian Courrèges, or even folks from CDPR will later bring us a more detailed and accurate breakdown of how things work. I’m just chilling out here, and any discussions and corrections are welcome!)

Overview

- I’m running the game on Ultra settings at 1080p with neither ray tracing nor DLSS enabled, for the sake of my years-“old” GTX 1070

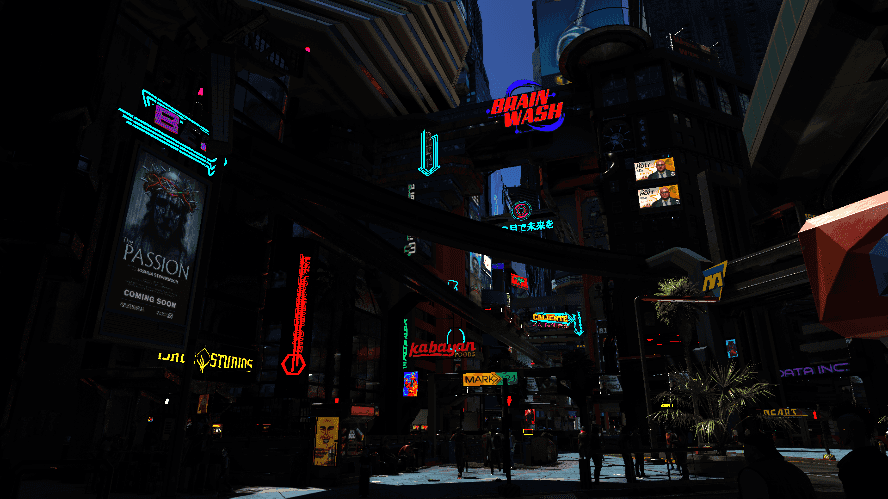

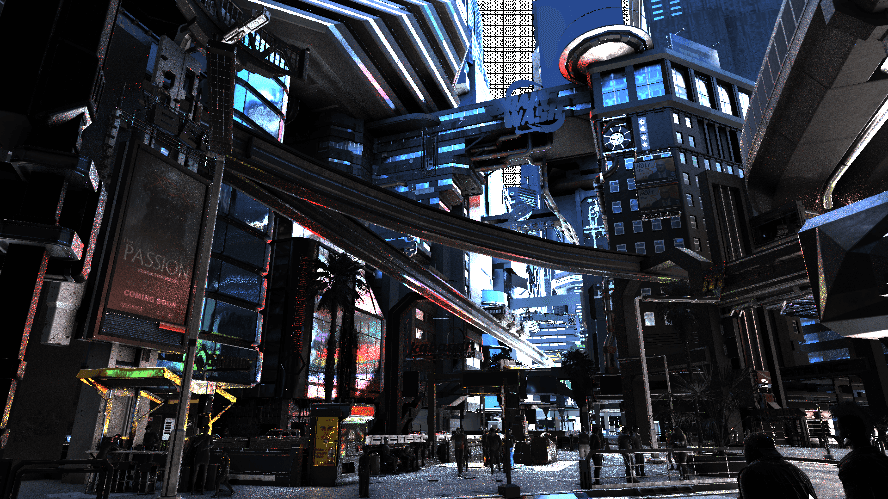

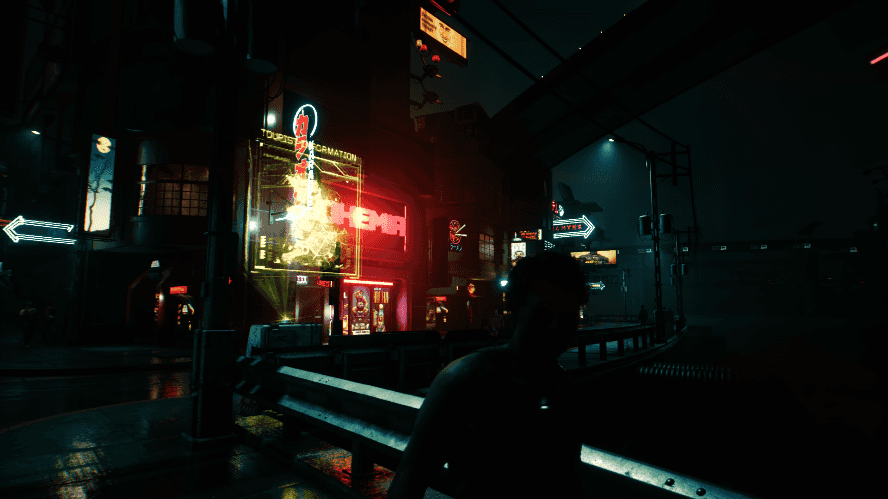

- Two captured final results: one is around the main character’s apartment during the day (let’s call it the main street) and the other is on a river bank at night (nobody dislikes eye candy!):

- The colors of some pictures are manually clamped for easier visualization

- It’s a DirectX 12-only game on Windows (if they started development around 2013 or slightly earlier, I’m curious how much effort it took to upgrade the engine)

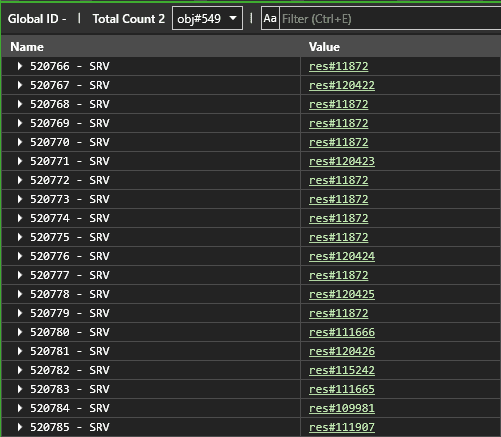

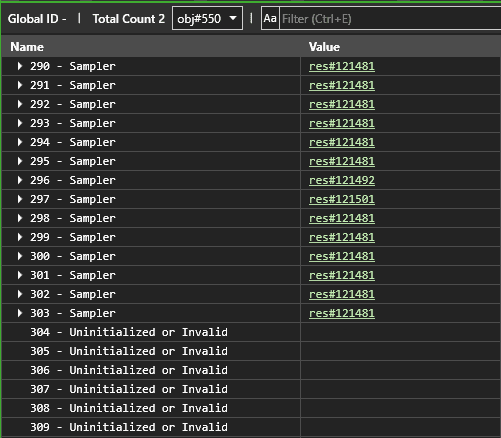

- Just 2 descriptor heaps are allocated: one for about half a million CBV/SRV/UAVs and another for around 2k samplers

-

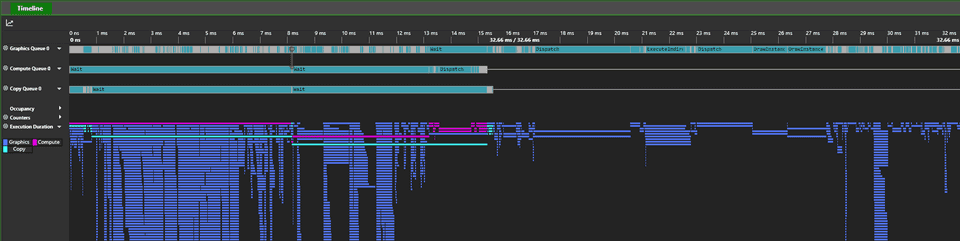

There is one Graphic queue, one Compute queue, and one Copy queue, the GPU work balance is quite even

-

ID3D12GraphicsCommandList::CopyBufferRegionis frequently called between passes to copy RT results -

Quite a lot

D3D12_RESOURCE_FLAGS::ALLOW_UNORDERED_ACCESSremoval recommendation was thrown out by PIX, not sure if it’s really just my capture occasionally didn’t use some resources or, they do need some optimizations (I had experiences that unordered access was quite hurting sometimes) -

Frame Timeline: Intensive at some early geometry stages, then deferred works kick in

Pre-geometry passes

Billboard and GUI

- The entire frame starts by preparing the resources for the in-game billboards and screens

- The first few copy operations are executed on 3 textures. The destination textures’ format is `DXGI_FORMAT_R8_UNORM`; the first is 480x272 and the other two are half that size. The source texture should be a mega texture, which is itself the destination of lots of later copy operations. (Question: what’s the purpose of these copies? The night scene doesn’t have these operations; is it related to streaming?)

- One of the three very first copied textures:

- In the next pass they are used as the shader input:

- Billboards are drawn to one texture where all mips are tightly packed together

- Billboards:

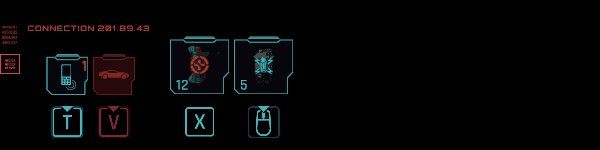

- Then the elements of the cyberish GUI are drawn and also copied to the mega texture

- Part of the GUI elements:

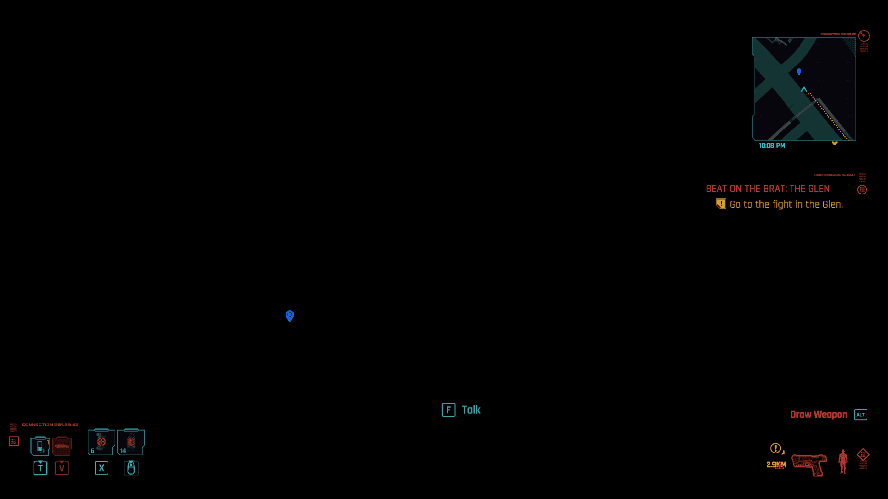

Sky visibility/top-view shadow/mini map

- The RT of the next pass is a single 2k `D16_UNORM` texture; around 400 instanced draw calls are executed in the captured scene (not sure how this RT is used later)

Unknown compute pass 01

- There are 2 dispatches over a 64x64x64 `R16G16B16A16_FLOAT` 3D texture in this pass (looks like voxelized normal/position?). The thread group count is 2x2x64 for the first dispatch and 16x16x16 for the second (the RT is used later multiple times in the terrain pass, ocean wave pass, depth-pre pass, and base material pass, so it is definitely something crucial for geometry information)

- Some slices of the 3D normal texture:
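The two group counts hint at the thread-group sizes of these dispatches. A minimal sketch, assuming each thread writes exactly one texel of the 64x64x64 volume (the capture does not confirm this, and `threads_per_group` is a hypothetical helper):

```python
def threads_per_group(texture_dims, group_counts):
    """Per-axis thread-group size if each thread handles exactly one texel (assumption)."""
    sizes = []
    for texels, groups in zip(texture_dims, group_counts):
        if texels % groups != 0:
            raise ValueError("group count does not evenly divide the texture")
        sizes.append(texels // groups)
    return tuple(sizes)


volume = (64, 64, 64)
first = threads_per_group(volume, (2, 2, 64))     # first dispatch
second = threads_per_group(volume, (16, 16, 16))  # second dispatch
print(first, second)
```

Under that assumption the first dispatch would use 32x32x1 = 1024 threads per group, which is the Direct3D 12 upper limit for a compute thread group, and the second would use 4x4x4 = 64.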

Unknown compute pass 02

- 3 dispatches of 63x1x1, 3x1x1, then again 63x1x1 thread groups (in the night scene capture it’s 38x1x1, 3x1x1, and 38x1x1); a few `ByteAddressBuffer` and `StructuredBuffer` resources are bound as UAVs (all of them are used later in the base material pass)

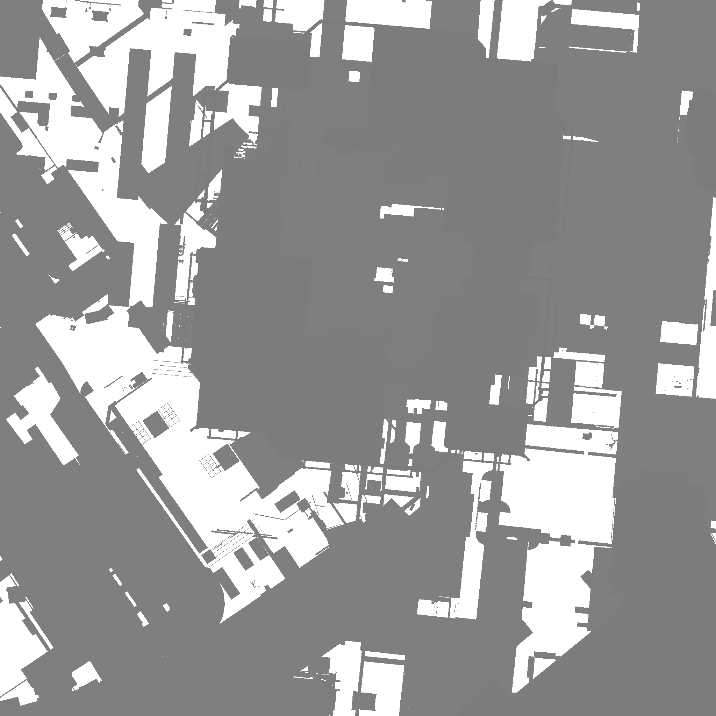

Terrain

- One 2-slice `R8G8B8A8_UNORM` 2D texture array and one `R16_UINT` 2D texture are drawn in 1k resolution (they look like chunks of terrain)

- 2 draw calls and RTs:

Unknown color pass 01

- 2 draw calls are issued (only in the main street capture), but nothing is drawn due to clipping (and the meshes are barely comprehensible; they look like 2 levels of a LOD-ed mesh). The RT is a single 256x256 `R16_UINT` 2D texture

Ocean wave

- The top-view ocean map mask and a generated wave noise texture are bound as input, and the RT format changes to `R16_UNORM`. There is no tessellation shader stage; some regular grid plate meshes are used to draw the different chunks directly

- Ocean wave noise in `R16G16B16A16_FLOAT` and mask in `BC7_SRGB`:

Geometry passes

Depth-pre pass

-

The RT is in

D32_S8_TYPELESSformat, surely it would help to optimize later pixel-heavy works -

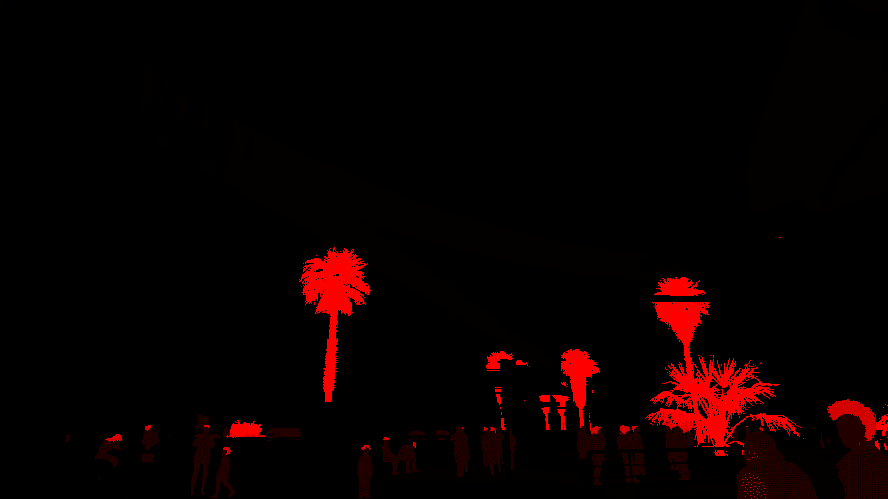

Depth-pre pass result:

Base material passes

- All vertex buffers uploaded to GPU memory are packed as SoA:

```
// VB0
nshort4 POSITION0;

// VB1
half2 TEXCOORD0;

// VB2
xint NORMAL0;
xint TANGENT0;

// VB3
unbyte4 COLOR0;
half2 TEXCOORD1;

// VB7
float4 INSTANCE_TRANSFORM0;
float4 INSTANCE_TRANSFORM1;
float4 INSTANCE_TRANSFORM2;
```
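To make the compact attribute types more concrete, here is a small sketch of how raw bytes in such streams could be decoded on the CPU. It assumes `nshort4` means four signed-normalized 16-bit integers and `half2` means two half-precision floats (the usual reading of these capture-tool type names); the helper names are hypothetical, and any per-mesh scale and offset applied to the quantized position afterwards is not visible in the capture.

```python
import struct


def decode_snorm16x4(raw):
    """Decode 8 bytes as four signed-normalized 16-bit values in [-1, 1]."""
    return tuple(max(v / 32767.0, -1.0) for v in struct.unpack("<4h", raw))


def decode_half2(raw):
    """Decode 4 bytes as two IEEE 754 half-precision floats."""
    return struct.unpack("<2e", raw)


# Made-up sample bytes, only to exercise the decoders.
position = decode_snorm16x4(struct.pack("<4h", 32767, -32768, 0, 16384))
uv = decode_half2(struct.pack("<2e", 0.5, 0.25))
print(position, uv)
```

Storing positions as 16-bit normalized integers and UVs as halves cuts the vertex size considerably compared to full `float` attributes, at the cost of a little precision.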

- In the pixel shader, a 64x64 `R16G16B16A16_UNORM` noise texture is sometimes bound as input; only the RG channels contain data, similar to what we’d use in SSAO for random normal rotation or offset

- All material textures are accessed through the descriptor table

- RT0 in `R10G10B10A2_UNORM` is the albedo; the alpha channel is used as an object mask, and animated meshes are marked as `0x03`

- RT1 in `R10G10B10A2_UNORM` is the world-space normal, packed by offset

- RT2 in `R8G8B8A8_UNORM` is metallic-roughness and other attributes; animated meshes are not rendered here, and the alpha channel should be the transparency or emissive property for emissive material objects

- RT3 in `R16G16_FLOAT` is the screen-space motion vector:

- Depth-stencil is in `D32_FLOAT_S8X24_UINT` format; stencil value `0x15` is used to mark character body meshes, `0x35` faces, `0x95` hair, and `0xA0` trees, bushes, and other foliage

- Drawing order:

- Static Mesh

- Ground and emissive objects

- Animated objects and destructible (?)

- Foliage

- Decals

- Mask for fur

- Fur
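The “packed by offset” normal in RT1 most likely refers to the common scale-and-bias trick for storing signed values in an unsigned-normalized target. A minimal sketch, assuming the usual `n * 0.5 + 0.5` mapping and 10 bits per channel (the exact shader code is not shown in the capture; the function names are hypothetical):

```python
def pack_unorm10(n):
    """Map one normal component from [-1, 1] to a 10-bit unsigned integer."""
    clamped = max(-1.0, min(1.0, n))
    return int((clamped * 0.5 + 0.5) * 1023.0 + 0.5)


def unpack_unorm10(q):
    """Inverse mapping: 10-bit unsigned integer back to [-1, 1]."""
    return (q / 1023.0) * 2.0 - 1.0


packed = [pack_unorm10(c) for c in (0.0, 0.0, 1.0)]
restored = [unpack_unorm10(q) for q in packed]
print(packed, restored)
```

Note that 0.0 cannot be represented exactly with this mapping, which is one reason why some engines prefer octahedral or other encodings for normals.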

DS convert passes

The `D32S8_TYPELESS` depth-stencil buffer is converted to `R32_TYPELESS` at full-screen size and then downsampled to half size as `R16_FLOAT` and `R8_UINT` in 3 passes, for easier pixel sampling later.

Motion-stencil pass

- All moving objects, foliage, and fur are marked with different bits in this pass based on their screen-space positions in the last frame, and the marks are then extended by a few pixels (exactly the same solution as in “Temporal Antialiasing in Uncharted 4”: better anti-ghosting!)

- Motion-stencil pass:
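Extending the marked region by a few pixels is essentially a dilation of the mask. A rough CPU sketch of the idea, assuming a square kernel and a binary mask (the real pass runs on the GPU, and its kernel shape and radius are not known from the capture):

```python
def dilate(mask, radius):
    """Grow every set pixel of a 2D 0/1 mask by `radius` pixels (square kernel)."""
    height, width = len(mask), len(mask[0])
    out = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            if not mask[y][x]:
                continue
            for ny in range(max(0, y - radius), min(height, y + radius + 1)):
                for nx in range(max(0, x - radius), min(width, x + radius + 1)):
                    out[ny][nx] = 1
    return out


mask = [[0] * 5 for _ in range(5)]
mask[2][2] = 1  # a single "moving" pixel
grown = dilate(mask, 1)
for row in grown:
    print(row)
```

The grown mask covers the silhouette edges of moving objects, which is where TAA history rejection matters most.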

Mask and LUT passes

Reflection mask

- Parts of some objects are drawn onto an `R8_UNORM` RT and used later in the SSR and TAA passes

Compute pass to noise normal

- The RT is used for sun shadow, AO(?), direct sunlight, SSR and indirect skylight passes

- Noise sample:

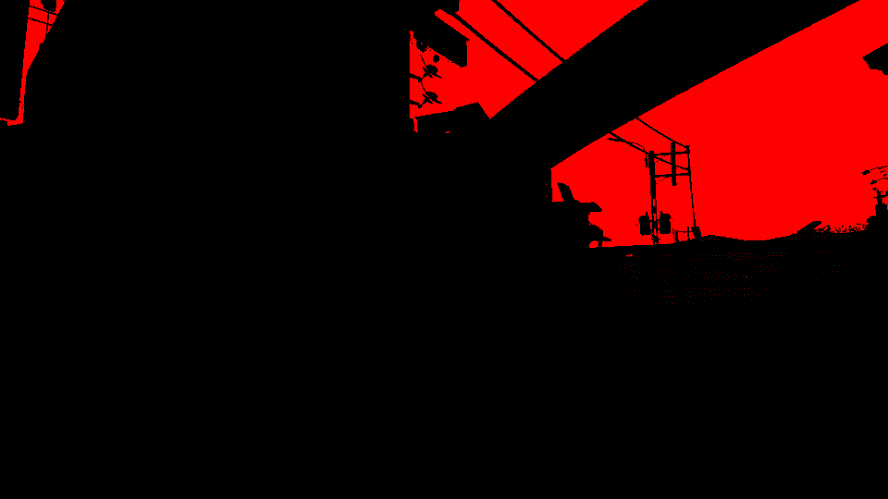

Some passes to mask the sky out

- All of them are in quite low resolution and mipmapped; the mipmap calculation is executed multiple times

- And finally, there is a graphics pass that draws a quite accurate sky mask on `R32_FLOAT`:

(HB)AO (?)

- All in 960x540, looks like some sort of AO-required normal data:

Color-grading LUT

- Color-grading LUT:

Ocean wave noise

- Around 22 compute dispatches of 32x32x1 are issued to generate the noise for water, which is used in the earlier ocean wave pass

Light and shadow passes

CSM passes for direct light

- A 2k `R16_TYPELESS` texture is used first to render a few simple meshes (some kind of mesh proxy?), but the RT is never used

- Then 4 classic CSM cascades are rendered and stored as 4 slices in a 4k `R16_TYPELESS` 2D texture array. No geometry shader RT index is involved; the draw calls are simply executed 4 times, once per cascade

Omni shadow maps for point/area lights

- Every omni shadow map is first rendered as `R32_TYPELESS` in 1k, then converted to a 10-slice `R16G16_UNORM` texture (should be VSM?)
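If the two-channel format really is variance shadow mapping (that is only a guess from the layout), the two channels would hold the depth and the squared depth, and the lookup would use Chebyshev’s inequality. A minimal sketch of that standard technique, with hypothetical function names and a made-up `min_variance` clamp:

```python
def vsm_moments(depth):
    """The two values a VSM stores per texel: depth and depth squared."""
    return depth, depth * depth


def chebyshev_upper_bound(mean, mean_sq, receiver_depth, min_variance=1e-5):
    """Upper bound on the lit fraction of the filtered region for this receiver."""
    if receiver_depth <= mean:
        return 1.0  # receiver is in front of the average occluder: fully lit
    variance = max(mean_sq - mean * mean, min_variance)
    d = receiver_depth - mean
    return variance / (variance + d * d)


# Filtering (here: averaging) the moments of two occluder depths.
m_a, m_b = vsm_moments(0.2), vsm_moments(0.6)
mean = (m_a[0] + m_b[0]) / 2.0
mean_sq = (m_a[1] + m_b[1]) / 2.0
lit = chebyshev_upper_bound(mean, mean_sq, receiver_depth=0.6)
print(lit)
```

Because the moments can be filtered linearly, the converted slices can be blurred or mipmapped like a regular color texture, which would fit with a separate conversion pass.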

Cloud distribution

Sky cubemaps

- 7 mipmap levels in `R11G11B10_FLOAT` format:

- A stereographically projected sky radiance is also generated:

Coat mask (?)

Clustered light index (?)

- Some compute-only passes generate a few 3D textures that contain only indices:

Direct light shadow

- At full-screen resolution; the light comes from the sun during the day and from the moon at night

Local lights mask (?)

- In 120x68 and 60x34 resolutions

Unconfirmed compute dispatches to update some StructuredBuffers

Environment Radiance capture

- First, a cubemap version is generated

- Then all of them are converted to 2D dual-paraboloid textures in 512x256, each containing 6 mips; 32 captures are stored as slices in one 2D texture array, and there are around 6 texture arrays in the current main street scene
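A 512x256 dual-paraboloid texture fits two 256x256 hemispheres side by side. A sketch of the textbook mapping from a direction to such an atlas, assuming the front hemisphere (+Z) sits in the left half and the back hemisphere in the right half (the actual layout and axis conventions in the game are not known; `dual_paraboloid_uv` is a hypothetical helper):

```python
import math


def dual_paraboloid_uv(direction):
    """Map a direction to (u, v) in a 2:1 atlas: front half left, back half right."""
    x, y, z = direction
    length = math.sqrt(x * x + y * y + z * z)
    x, y, z = x / length, y / length, z / length
    front = z >= 0.0
    denom = 1.0 + abs(z)             # paraboloid projection divisor
    u_half = (x / denom) * 0.5 + 0.5  # [0, 1] inside one hemisphere
    v = (y / denom) * 0.5 + 0.5
    u = u_half * 0.5 if front else 0.5 + u_half * 0.5
    return u, v


print(dual_paraboloid_uv((0.0, 0.0, 1.0)))   # center of the front half
print(dual_paraboloid_uv((0.0, 0.0, -1.0)))  # center of the back half
```

Compared to a cubemap, this needs only one 2D slice per capture, which makes it convenient to store many probes in a single texture array.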

A lot of GI-related (?) compute passes

- A 64x64x64 texture in `R32G32_UINT` format; the 2 channels contain index-like data

- Slice 19 for example:

Landscape maps

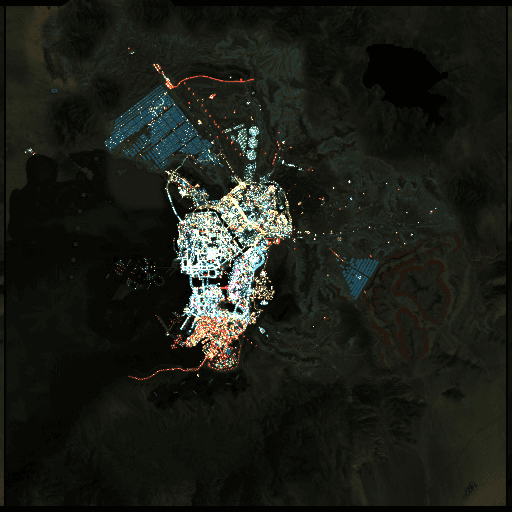

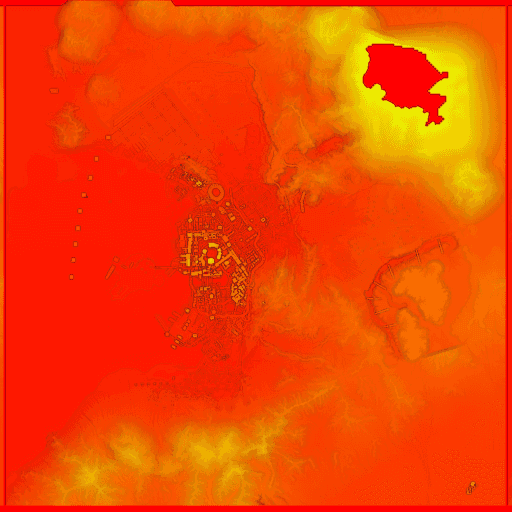

- A few layers are drawn here, from normals to a kind of heatmap, in 1k resolution

Local volumetric fog

- 2 mips, from 240x136 to 120x68, each with 128 slices; mip 0 is from the main camera view, and it should be some kind of ray marching:

Head mask

- The most hilarious one; it should be used later to render detailed facial expressions

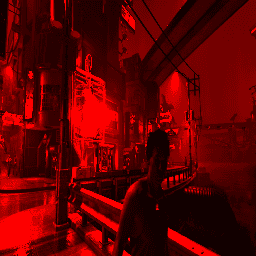

Direct and emissive lights

- Tiled lighting: each tile is 16x16 pixels, and everything is executed as indirect compute commands:
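For reference, the number of 16x16 tiles needed to cover the 1080p frame can be computed with a rounded-up division (`tile_grid` is a hypothetical helper, not something taken from the capture):

```python
def tile_grid(width, height, tile=16):
    """Number of tiles per axis needed to cover the frame, rounding up."""
    return (width + tile - 1) // tile, (height + tile - 1) // tile


tiles_x, tiles_y = tile_grid(1920, 1080)
print(tiles_x, tiles_y, tiles_x * tiles_y)
```

At 1080p this gives 120x68 tiles, the same numbers as the 120x68 resolution noted for the local lights mask, which may not be a coincidence.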

Sky

- Only the masked sky region is drawn

SSR

- A low-resolution depth mask is drawn first:

- Then the full-screen SSR is drawn in `R11G11B10_FLOAT`, with another `R8_UNORM` RT for reflectivity. The previously noised world-space normal and the motion-stencil RT are used as inputs, and the last frame’s downscaled TAA output is used for sampling:

Indirect light

- Some artifacts are visible (light leaking because of VCT?):

All lights composition pass

- 2 RTs, specular reflections are stored on RT1:

Transparent LUT

- Compute shaders are used a few times to generate some LUTs for the later transparent and holographic objects:

Skin and eyes

- Skin irradiance is rendered in screen space:

- Then eyes are rendered:

AO(?)/GI shadow

- Adds additional shadows to pixels that should not be lit too much:

Volumetric fog

- Just a simple image composition pass:

Water reflection

- A few passes generate the water reflection, including all related masks and noises:

Post-processing passes

TAA

- Next is a full-screen, no-surprise TAA pass:

Blur

- First, the TAA-ed result is downscaled to 1/2, 1/4, and 1/8 size in 1 compute pass and stored in 3 mips, then each of the mips is blurred horizontally and vertically
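Blurring horizontally and then vertically is the classic separable blur: two 1D passes instead of one 2D kernel. A small CPU sketch of the idea, assuming a 3-tap binomial kernel and clamped edges (the game’s actual kernel weights and width are unknown):

```python
def blur_1d(row, kernel):
    """Convolve one row with a symmetric kernel, clamping at the edges."""
    radius = len(kernel) // 2
    last = len(row) - 1
    return [
        sum(kernel[k + radius] * row[min(max(i + k, 0), last)]
            for k in range(-radius, radius + 1))
        for i in range(len(row))
    ]


def separable_blur(image, kernel):
    """Horizontal pass over the rows, then vertical pass over the columns."""
    horizontal = [blur_1d(row, kernel) for row in image]
    columns = [blur_1d(list(col), kernel) for col in zip(*horizontal)]
    return [list(row) for row in zip(*columns)]


kernel = [0.25, 0.5, 0.25]  # assumed weights, for illustration only
image = [[0.0] * 5 for _ in range(5)]
image[2][2] = 1.0
blurred = separable_blur(image, kernel)
for row in blurred:
    print(row)
```

For a kernel of width N this costs 2N samples per pixel instead of N², which is why the blur is split into two passes per mip.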

Unknown transparent

Cloud

- The distribution noise of the cloud is pre-generated:

Holo and transparent objects

- All futuristic holographic objects are drawn next (a significant number of low-triangle-count meshes), including the water surface and some local light scattering:

Post-TAA

- 2 downscaled images are generated again in 1/2 and 1/4 sizes with all transparent objects on them, and finally, it’s converted back to full-screen size and gets sharpened

HDR LUT(?)

- A 256x256 `R16_FLOAT` image, a 256x64 `R16G16B16A16_FLOAT` image, and a 256x1 `R16G16B16A16_FLOAT` image are generated by 3 compute dispatches. They are then used as part of the inputs for the next compute pass, which generates a structured buffer that is widely used when rendering sky cubemaps and reflection probes

Bloom

- The full-screen image is halved in size 6 times and bloom is applied
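The sizes of such a downsample chain at 1080p are easy to list. A quick sketch, assuming each step halves both dimensions and rounds down, as the usual mip convention does (the exact RT sizes in the capture may be rounded differently):

```python
def half_chain(width, height, steps):
    """Sizes produced by repeatedly halving a resolution, rounding down."""
    sizes = []
    for _ in range(steps):
        width, height = max(1, width // 2), max(1, height // 2)
        sizes.append((width, height))
    return sizes


chain = half_chain(1920, 1080, 6)
for size in chain:
    print(size)
```

Under these assumptions the smallest level is only 30x16, so a small blur there already spreads light across a large part of the screen.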

Camera lens effects

- Rendered on a half-screen size RT

Color grading

- The color-graded image is then converted to LDR

Gamma correction

- The gamma correction is executed at a weird 456x256 resolution
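The capture does not reveal which transfer curve this pass applies. As an illustration only, here is the standard sRGB encoding that “gamma correction” most commonly refers to (using sRGB here is an assumption, not something confirmed by the frame):

```python
def linear_to_srgb(c):
    """Standard sRGB encoding of one linear color channel in [0, 1]."""
    c = max(0.0, min(1.0, c))
    if c <= 0.0031308:
        return 12.92 * c  # linear segment near black
    return 1.055 * c ** (1.0 / 2.4) - 0.055


for value in (0.0, 0.18, 0.5, 1.0):
    print(value, linear_to_srgb(value))
```

The curve spends more code values on dark tones, which is why a linear mid-gray of 0.5 ends up well above 0.5 after encoding.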

GUI elements

- All GUI elements are drawn on a full HD RT and the corresponding mipmaps are generated, and then a few compute passes add bloom to them

Film Grain

- The noise LUT is generated on-the-fly

Swap chain image

- Finally, the result of all the above is presented to the screen

Undrawnable conclusions

Well, what else can I say? Two decades of the 21st century have already passed, and Cyberpunk 2077 is basically the sum of the modern game rendering techniques that have developed over those years. Even without ray tracing enabled, the overall appearance of the graphics is magnificent; I feel pure euphoria when I’m pacing around Night City. Despite all the controversies over the release and the gameplay glitches, which are mainly caused by a not-robust-enough physics system and an overestimated streaming implementation, the game’s rendering is quite well-crafted. Still, I expected to see more state-of-the-art architectural practices, such as a more heavily GPU-driven rendering pipeline (which has become more and more popular since 2016-2017). What’s your opinion about it? And what would change if we came back 5 years later for a review? Maybe we’d laugh at the “outdated” techniques in Cyberpunk 2077, but I’m sure we’ll still agree that real-time rendering is always full of exciting directions to explore!