How expensive is it to create your own AAA-level game audio solution?

Don’t be greedy, unless it’s really cheap

I’ve been in the game industry for almost 5 years since I broke up with traditional music post-production, and the official direction in which I spend 8*5 (of course, sometimes more) hours a week and get paid is, not surprisingly, game audio. No matter how familiar you are with this domain, you have more or less heard names like FMOD or Wwise in recent years, even if you’re just coming from a typical gamer’s point of view. Nowadays anybody who struggles to pick a sound acceleration card would be called a perfectionist; a rational mind would just spend some hundreds or thousands of whatever-your-local-currency-is on some decent speakers or headphones, and that’s ALL for gaming.

Game audio tech is quite saturated, and so is the hardware capability for game audio. It’s not so tempting for the industry to figure out something truly rejuvenating: the VBAP algorithm is good enough, traditional reverb is good enough, the shoebox spatialization model is good enough, the geometry-based sound propagation model is good enough, and nobody would spend expensive runtime resources on a 1% more accurate sound scene. But let me be clear: I’m not pessimistic about this. It’s again an economic problem. A game is, by nature, art and entertainment built on real-time simulation software, and all we need is something good enough to please ourselves. The current game audio tech suits almost all current games: whether you’re playing GTA V in 2013 or 2020, your CPU would hardly complain about its audio tasks, but you’d keep trying to augment the graphics with mods and new graphics cards, because the holy grail of real-time rendering is still out there. It’s not good enough yet!

So almost all the progress you’d see among vendors and studios in recent years is related to working pipelines, toolchains and design. Sometimes a voxel-based or wave simulation solution pops up, but maybe they are not eye-catching enough, or not robust enough, or whatever the reason, and the audio community hasn’t reacted actively to them. From everything I have seen and experienced so far, I accumulated one question: what is the core unsolved problem in game audio?

In some years SIGGRAPH has a course called Open Problems in Real-Time Rendering, which covers the unsolved problems of that field. I often wonder how many open problems we still have in game audio. Acoustic sound propagation would be one; we haven’t found an acceptable, general, one-for-all answer yet. Maybe the sound reproduction mechanism, the so-called 3D sound, would be another? A one-dimensional PCM sample file is fed into a pair of stereo headphones; think about how many compromises we have made compared with how sound waves travel into our ears in real life! Apart from those physics-related problems, is it really comfortable and easy for audio artists to design and implement something for a game nowadays? Every piece of audio software and middleware brings its own working philosophy and forces you to adapt to its model, and their feature sets keep growing with every release. There is too much trivial knowledge we have to learn!

What’s the matter?

There is a famous quote, “If your only tool is a hammer, then every problem looks like a nail”. I’d plagiarize it and modify it a little into “If you have a problem and a Swiss Army knife, then you’ll never find a way to solve it”, based on my experiences with some software. I’m not criticizing how many unused abilities they provide, but how messily those abilities are delivered to the user. I always need just 10%-20% of their functionality for my problems, a friend of mine needs another 10%, and some other friends use them occasionally too, so in the end the developers release a bloated version with 150% of the features. Yes, I’m not only targeting Photoshop or Word or Unreal Engine or Wwise; I’m talking about all those frustrating experiences where I spend as much time searching documentation as solving my problems. But thank god we have the Unix philosophy, a genius invention from half a century ago which finally saved me from being a total slave of click-and-drag. I’ll list one version of it again because I like these principles so much, and they are not even limited to software development:

- Make it easy to write, test, and run programs.

- Interactive use instead of batch processing.

- Economy and elegance of design due to size constraints (“salvation through suffering”).

- Self-supporting system: all Unix software is maintained under Unix.

Gosh, look at what we are doing every day now! I’ll always remember the feeling when I installed Chocolatey on Windows 10 on my home computer and just ran choco install cmake. If you have never used yum or apt-get (or brew on macOS), you’d never understand how free it feels to have a package manager for all the software on your computer. The problem here is “why do I always have to download software installers by myself?” and the solution is “find/build a package manager”.

So now let’s take a look at our poor game audio working pipelines. The audio designer exports some .wav assets from some colorful but messy DAW, imports them into the middleware’s editor, exports them again in the middleware’s asset format, imports them again into the game’s editor, and then maybe needs to package them once more into a tight pack for the game’s release. Even if you automate this procedure, you’re still wasting time and complicating the situation. But wait, what do we actually need to do in order to produce audio for a game?

This is an over-simplified version, but what else is there? The designer produces assets and the programmer produces code; naturally, it has worked like this in the game industry since the 90s. But the borderline between assets and code has kept moving over the years: the designer wants something programmable or configurable, but at a higher level and in a visual-friendly way, and the programmer doesn’t want to handle so many low-level details, and so the programmable-asset framework became a trend:

Thanks to this trend, game audio middleware solutions like FMOD and Wwise have become more and more popular. But they are not simple enough, if you remember what I complained about above: you have to give up some freedom in your workflow to gain another kind of freedom, namely that you can finally get rid of the low-level details and leave them to the middleware. But is it really so scary to get our hands dirty with so-called “low-level details” nowadays?

While researching this problem (searching, actually, most of the time), I found a nice GDC 2014 presentation, The Next-Gen Dynamic Sound System of Killzone Shadow Fall, by speakers from Guerrilla Games. It answered my question and convinced me of another possibility: getting full control of the audio in your game back is not a behemoth-level task. Killzone: Shadow Fall is a successful first-party FPS released on PS4 7 years ago. If a 200+ employee (worldwide top-level) studio could afford to develop its own game audio solution for a AAA game in practice, it simply means that it’s not always an impossible task for other kinds of devs to do the same. Even once you consider the time and energy cost, it’s maybe not cheaper than a middleware license fee, but it’s also not more expensive than continuing to believe that the “low-level details” are untouchable. So I decided to recreate their solution by myself. 2 hands + 1 brain and an IDE; let’s review how far I have come so far.

The architecture

Ideally, this solution would leave minimal workflow barriers between audio assets, audio code, and the game itself. So if we follow the architecture they demonstrated, we need at least these few modules:

I tried to depend on open-source projects as much as I could for each module, because I want to keep the code iteration speed fast and see actual results (a little bit sarcastic, actually); I’ll leave the business that is not directly related to my problems to them. And the final winners for each module are:

- Graph Editor: imgui and imgui-node-editor. I’ve had some nice experiences with Dear ImGui in the past: it is quite stable and easy to use, the API is super intuitive, and it’s lightweight compared with other GUI libs like Qt. And imgui-node-editor is a project built on top of imgui (thanks thedmd for the hard work on it!) which provides facilities that make building a node editor easier than starting from scratch.

- Graph Compiler: I decided to generate C++ source code from the node graph by myself, and then build it with CMake and a commonly used build toolchain like MSVC.

- I/O: I’ve implemented a small WAV loader and parser module before, so I’ll keep extending it for audio asset I/O. For all the other metadata, like node graphs and node descriptions, we obviously need something human-readable and easy to serialize. So far nlohmann’s json is the most handsome JSON lib for C++ I’ve used, so why not choose it?

- Audio Player: I took the same approach as when choosing a graphics API. I don’t want to be tied tightly to one platform or OS, and I prefer something like bgfx in rendering, which wraps all the platform differences but still leaves me the actual platform-independent low-level details. After some brief research (a bit of a gamble), I found miniaudio (I like single-header C libs, because you can just blame them when something isn’t working :)). It’s exactly what I imagined: an opaque wrapper layer over the OS and audio backends.

- Plug-in Hook: I’d like to build something WYSIWYG, so that the user can hear the result as soon as they change the node graph. It’s the first time I’ve touched dynamic library hot-reloading, but my lack of deep knowledge about linking didn’t raise many barriers, since I found cr. It automatically manages all the underlying vtable relocation and scope-related things for you, and the API is really concise, as the documentation says: only 3 lines of code.

I didn’t try to make these decisions before writing the actual code; the architecture evolved during development, and there were several significant refactorings, so if you would like to choose different approaches it’s totally possible.

The implementation

It’s always easier to draw a beautiful architecture picture than to successfully build the project. There were still lots of trivial, problematic nuances in the details when I implemented it, but luckily the code quality seems to be converging now, so I think my estimate of the challenge at the beginning was about right.

API style

Functional programming is all about calculation: feeding inputs to functions and getting outputs, staying far from the global scope and minimizing state changes, so that finally you can go to the pub on Friday night rather than keep working on bugs. But sadly it’s not always easy to do so. This audio lib is not very performance-critical, so I’d like to maintain some state machines, and every API returns an execution result like a typical Windows HRESULT:

namespace Waveless

{

enum class WsResult

{

NotImplemented,

Success,

Fail,

NotCompatible,

IDNotFound,

FileNotFound

};

}

And any modification of a function’s input should be applied through pointers:

using namespace Waveless;

WsResult foo(float* bar, float foobar)

{

if(bar == nullptr)

{

return WsResult::Fail;

}

if(*bar > 512.0f)

{

*bar += foobar;

}

else

{

*bar -= foobar;

}

return WsResult::Success;

}

The lowest low-level details

All software runs on hardware: we need a logger to print logs, a timer to check the time, a memory manager to play with heap memory, some not so dumb and naive math functions… I could have chosen some open-source utility libs again, but since I had already implemented some of these in another project, I reuse them directly here. After all, they are not very complex to start with if you are happy with the STL. Let’s take a look at an example:

namespace Waveless

{

enum class TimeUnit { Microsecond, Millisecond, Second, Minute, Hour, Day, Month, Year };

struct Timestamp

{

uint32_t Year;

uint32_t Month;

uint32_t Day;

uint32_t Hour;

uint32_t Minute;

uint32_t Second;

uint32_t Millisecond;

uint32_t Microsecond;

};

class Timer

{

public:

static WsResult Initialize();

static WsResult Terminate();

static const uint64_t GetCurrentTimeFromEpoch(TimeUnit time_unit);

static const Timestamp GetCurrentTime(uint32_t time_zone_adjustment);

};

}

The Object

I’m against the irresponsible OOP mindset, so I won’t simply encapsulate behaviors and data together. But it’s still necessary to have some very common properties shared by all the “object”s, hence:

namespace Waveless

{

enum class ObjectState

{

Created,

Activated,

Terminated

};

struct Object

{

uint64_t UUID;

ObjectState objectState = ObjectState::Created;

};

}

Depending on how maniacal you are about ECS, you could inherit from the Object structure or just insert it as a field into other types (or rename it to ObjectMetadataComponent or TheMotherOfAllComponent?). I’m okay with harmless inheritance.

WAV instance

There have been too many revisions of the WAV standard over the years, and parsing them by yourself is, I’d say, not a very wise choice. Anyway, I’ve done this meaningless thing, and my WAV loader class now supports almost all types of WAV, from the standard 44-byte header WAV to BWF and RF64 (a recommendation for sound designers and musicians: don’t use strange DAWs). The WAV instance simply contains the header and a pointer to the sample array on the heap. For the parsing details you can take a look at the actual source file.

struct WavHeader

{

RIFFChunk RIFFChunk;

JunkChunk JunkChunk;

fmtChunk fmtChunk;

factChunk factChunk;

bextChunk bextChunk;

dataChunk dataChunk;

int ChunkValidities[6] = { 0 };

};

struct WavObject

{

WavHeader header;

char* samples;

int count;

};

Audio Player

My previous experience with DSP audio programming was all quite “academic”. When you just expect numpy to give you a correct small array, everything looks like a fairy tale; miniaudio, however, is a C library, and now I have to deal with real audio samples from music and sound effects. Audio rendering is quite similar to graphics rendering: you have a rendering frame of a certain length between every two presentations of the final result, and during this period you can process the audio buffer with your desired DSP algorithms. But in graphics rendering all of this happens on the GPU, while audio rendering is typically executed on the CPU (we won’t touch hardware audio chips), so the synchronization is actually easier to manage since we only have to take care of one device.

The audio buffer is just a chunk of raw memory. The underlying data type ranges from 16-bit integers to 64-bit double-precision floats, depending on how much precision you’d like to maintain while performing DSP. The buffer shouldn’t be too large, because we now have a stricter time restriction: we must finish all processing on the current buffer before the previous frame’s buffer finishes playing on the playback device, otherwise we’ll hear discontinuities. A common song lasts around 4-5 minutes; if we pour all of its samples into the audio buffer, then of course we need to wait quite a long time to hear it if the DSP algorithms are expensive. A better choice is to consume just a little bit of data from the entire sample array every frame, and discard it after playing:
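The chunked consumption described above can be sketched roughly as follows. This is only an illustration under my own assumptions: SampleCursor, consume_frames and buffer_duration_ms are hypothetical names, not part of miniaudio or of this project, and the samples are assumed to be interleaved 32-bit floats that are already fully loaded in memory.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical helper: walks through a fully loaded, interleaved sample array
// one small chunk at a time, the way a playback callback would.
struct SampleCursor
{
    const std::vector<float>* samples = nullptr; // whole asset, interleaved
    uint32_t channels = 2;
    std::size_t nextFrame = 0;                   // first frame not yet consumed
};

// Copies up to frameCount frames into output and advances the cursor.
// Returns the number of frames actually copied (fewer than frameCount at the end).
inline uint32_t consume_frames(SampleCursor& cursor, float* output, uint32_t frameCount)
{
    assert(cursor.samples != nullptr && cursor.channels > 0);

    const std::size_t totalFrames = cursor.samples->size() / cursor.channels;
    const std::size_t remaining = totalFrames - cursor.nextFrame;
    const uint32_t framesToCopy =
        static_cast<uint32_t>(std::min<std::size_t>(frameCount, remaining));

    const float* src = cursor.samples->data() + cursor.nextFrame * cursor.channels;
    std::copy(src, src + static_cast<std::size_t>(framesToCopy) * cursor.channels, output);

    cursor.nextFrame += framesToCopy;
    return framesToCopy;
}

// Time covered by one buffer, in milliseconds. This is the processing budget
// for everything done on that buffer.
inline double buffer_duration_ms(uint32_t frameCount, uint32_t sampleRate)
{
    assert(sampleRate > 0);
    return 1000.0 * frameCount / sampleRate;
}
```

For example, at 44100 Hz a buffer of 441 frames covers 10 ms, so decoding, filtering and mixing for all active sounds have to fit into that window.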

miniaudio provides buffer processing through a callback mechanism: we initialize the audio device and bind our callback function, then start the device, and it calls our callback every rendering frame. In the callback we perform our DSP algorithms and write to the output audio buffer. I wrapped miniaudio into an audio engine class:

// ignore other details

void data_callback(ma_device* pDevice, void* pOutput, const void* pInput, ma_uint32 frameCount);

ma_device_config deviceConfig;

ma_device device;

ma_decoder_config deviceDecoderConfig;

ma_event terminateEvent;

WsResult AudioEngine::Initialize()

{

deviceDecoderConfig = ma_decoder_config_init(ma_format_f32, 2, MA_SAMPLE_RATE_44100);

deviceConfig = ma_device_config_init(ma_device_type_playback);

deviceConfig.playback.format = deviceDecoderConfig.format;

deviceConfig.playback.channels = deviceDecoderConfig.channels;

deviceConfig.sampleRate = deviceDecoderConfig.sampleRate;

deviceConfig.dataCallback = data_callback;

deviceConfig.pUserData = nullptr;

if (ma_device_init(NULL, &deviceConfig, &device) != MA_SUCCESS)

{

Logger::Log(LogLevel::Error, "Failed to open playback device.");

Terminate();

return WsResult::Fail;

}

ma_event_init(device.pContext, &terminateEvent);

if (ma_device_start(&device) != MA_SUCCESS)

{

Logger::Log(LogLevel::Error, "Failed to start playback device.");

Terminate();

return WsResult::Fail;

}

return WsResult::Success;

}

As you can see, the callback function has a parameter called frameCount. The frame here means a sample frame, and one sample frame contains one sample per channel; if the audio is stored interleaved, the samples of a frame sit next to each other in memory:
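To make the interleaved layout concrete, here is a tiny sketch of the index arithmetic. The helper names interleaved_index and samples_in_buffer are hypothetical and only serve the illustration.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>

// Index of one sample inside an interleaved buffer: frames are stored back to
// back, and each frame holds one sample per channel.
inline std::size_t interleaved_index(std::size_t frame, uint32_t channel, uint32_t channels)
{
    assert(channel < channels);
    return frame * channels + channel;
}

// Total number of samples the callback has to fill for frameCount frames.
inline std::size_t samples_in_buffer(uint32_t frameCount, uint32_t channels)
{
    return static_cast<std::size_t>(frameCount) * channels;
}
```

For stereo the memory reads L0 R0 L1 R1 L2 R2 and so on, so the right-channel sample of frame 3 sits at index 7, and a 512-frame stereo callback has to write 1024 floats.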

Since I’m writing this project for game audio, a sound effect would typically have multiple instances playing at the same time, so it’s necessary to distinguish between the asset data and the instance data. We don’t tamper with the asset data, but we can have as many instances as we want, so now we add 2 more new types:

struct PlayableObject : public Object

{

ma_decoder_config decoderConfig;

};

struct EventPrototype : public PlayableObject

{

WavObject* wavObject;

};

struct EventInstance : public PlayableObject

{

ma_decoder decoder;

ma_event stopEvent;

};

The commonly used name for sound effect instances in the game audio community is “voice”. I didn’t choose it because to me it sounds a little vague. I chose to describe the asset and instance data as “event” instead, since I’d like to emphasize “what happened” rather than “a sound is playing” (also, I’m still a user of FMOD and Wwise, but my version of “event” is slightly different from theirs).

And the overall audio engine would look like this:

And finally let’s take a look at the core of the audio engine, the data callback function:

void data_callback(ma_device* pDevice, void* pOutput, const void* pInput, ma_uint32 frameCount)

{

for (auto i : g_eventInstances)

{

if (i.second->objectState == ObjectState::Activated)

{

auto l_frame = read_and_mix_pcm_frames(i.second, reinterpret_cast<float*>(pOutput), frameCount);

if (l_frame < frameCount)

{

i.second->objectState = ObjectState::Terminated;

ma_event_signal(&i.second->stopEvent);

}

}

}

(void)pInput;

}

It iterates over all currently activated event instances and mixes them into the output buffer. If the frame count returned for an event instance is smaller than the requested frame count, it simply means that we’ve reached the end of the instance’s samples, and we signal a stop event for further cleanup.

I copied the submixing function from the miniaudio examples and modified it to fit my current design:

ma_uint32 read_and_mix_pcm_frames(EventInstance* eventInstance, float* pOutput, ma_uint32 frameCount)

{

float temp[sizeOfTempBuffer];

ma_uint32 tempCapInFrames = ma_countof(temp) / eventInstance->decoderConfig.channels;

ma_uint32 totalFramesRead = 0;

while (totalFramesRead < frameCount)

{

ma_uint32 iSample;

ma_uint32 framesReadThisIteration;

ma_uint32 totalFramesRemaining = frameCount - totalFramesRead;

ma_uint32 framesToReadThisIteration = tempCapInFrames;

if (framesToReadThisIteration > totalFramesRemaining)

{

framesToReadThisIteration = totalFramesRemaining;

}

// Decode and apply filters

framesReadThisIteration = ma_decoder_read_pcm_frames(&eventInstance->decoder, temp, framesToReadThisIteration);

if (framesReadThisIteration == 0)

{

break;

}

if (eventInstance->cutOffFreqLPF != 0.0f)

{

low_pass_filter(deviceDecoderConfig.channels, eventInstance->cutOffFreqLPF, deviceDecoderConfig.sampleRate, eventInstance->sampleStateLPF, temp, framesReadThisIteration);

}

if (eventInstance->cutOffFreqHPF != 0.0f)

{

high_pass_filter(deviceDecoderConfig.channels, eventInstance->cutOffFreqHPF, deviceDecoderConfig.sampleRate, eventInstance->sampleStateHPF, temp, framesReadThisIteration);

}

/* Mix the frames together. */

for (iSample = 0; iSample < framesReadThisIteration * deviceDecoderConfig.channels; ++iSample)

{

pOutput[totalFramesRead * deviceDecoderConfig.channels + iSample] += temp[iSample];

}

totalFramesRead += framesReadThisIteration;

if (framesReadThisIteration < framesToReadThisIteration)

{

break; /* Reached EOF. */

}

}

return totalFramesRead;

}

It looks a little bit scary, but the actual algorithm is simple: we use a temporary stack buffer to decode the samples of the event instance, and add them onto the output buffer. Since we don’t know how many sample frames ma_decoder_read_pcm_frames will return, we keep decoding until we reach the requested frame count. I also added my own LPF and HPF here to evaluate the feasibility of a mixing chain, and it actually works! But I’d like to revisit this part later for a more flexible mixing architecture; having just some filters is not enough for game audio.
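The bodies of low_pass_filter and high_pass_filter are not shown in this post. As a rough idea of what such a per-buffer filter can look like, here is a sketch of a first-order (one-pole) low-pass. It is an assumption on my side and not necessarily the filter used in the project; the name one_pole_low_pass and its parameter layout are hypothetical, merely mirroring the call above.

```cpp
#include <cassert>
#include <cstdint>

// First-order (one-pole) low-pass filter applied in place to an interleaved
// buffer. state must point to one float per channel, zero-initialized before
// the first call and kept alive between callbacks, so that the filter stays
// continuous across buffer boundaries.
inline void one_pole_low_pass(uint32_t channels, float cutoffHz, uint32_t sampleRate,
                              float* state, float* buffer, uint32_t frameCount)
{
    assert(cutoffHz > 0.0f && sampleRate > 0);

    const float pi = 3.14159265358979f;
    const float dt = 1.0f / static_cast<float>(sampleRate);
    const float rc = 1.0f / (2.0f * pi * cutoffHz);
    const float alpha = dt / (rc + dt); // smoothing factor in (0, 1)

    for (uint32_t frame = 0; frame < frameCount; ++frame)
    {
        for (uint32_t ch = 0; ch < channels; ++ch)
        {
            float& sample = buffer[frame * channels + ch];
            // y[n] = y[n-1] + alpha * (x[n] - y[n-1])
            state[ch] += alpha * (sample - state[ch]);
            sample = state[ch];
        }
    }
}
```

The per-channel state is the reason the event instance carries fields such as sampleStateLPF: without it, every callback would restart the filter from zero and produce a click at each buffer boundary.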

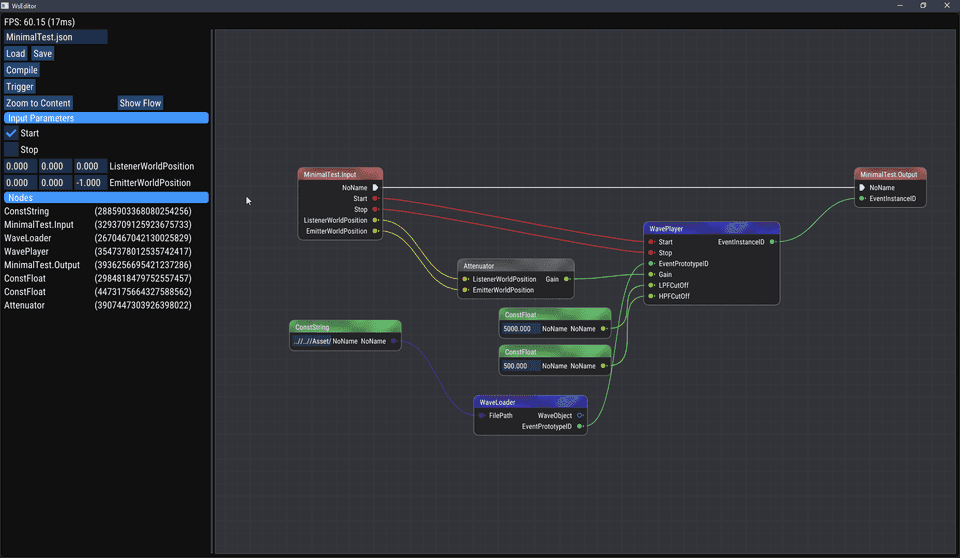

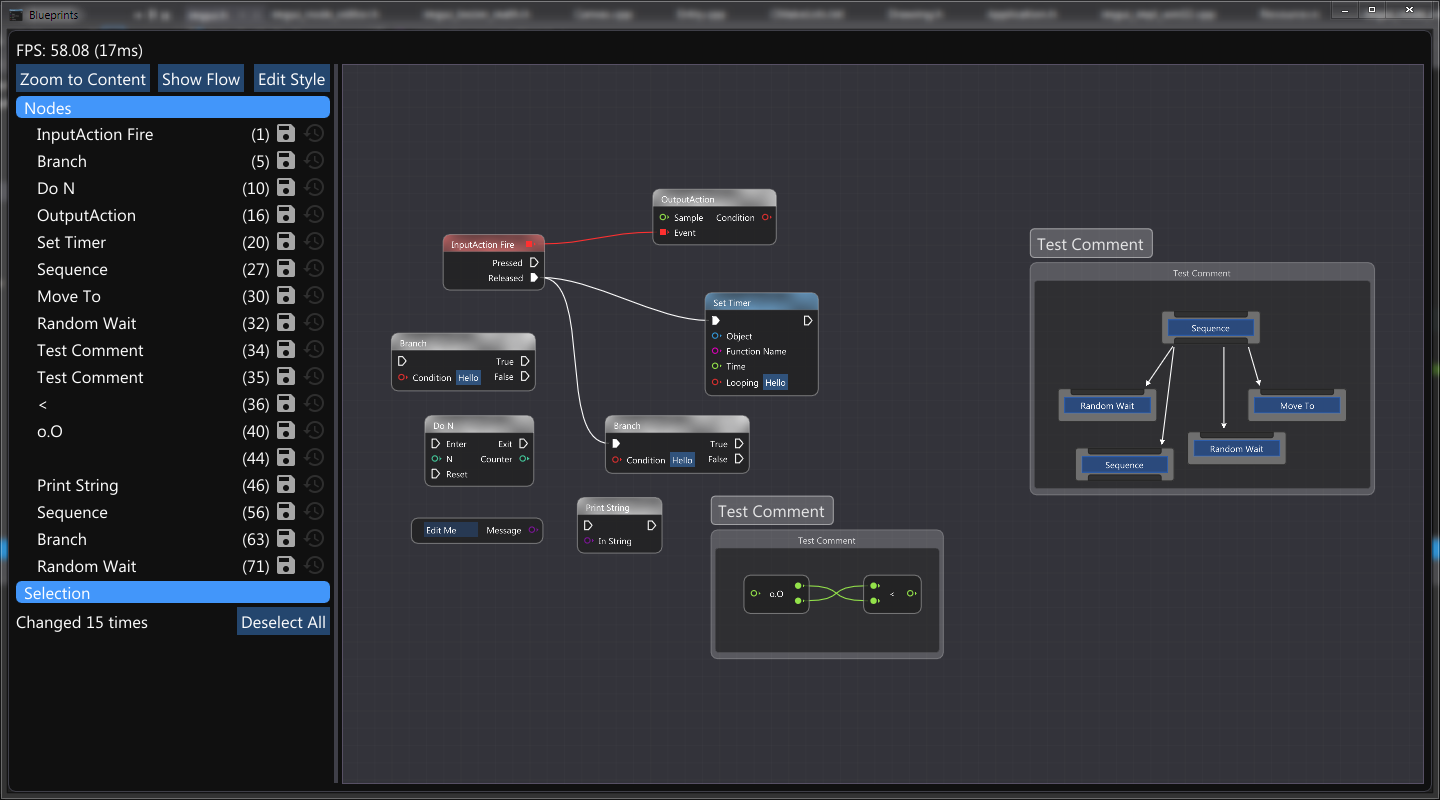

Graph Editor

If you take a look at the excellent blueprint editor example from imgui-node-editor, you’d agree it’s as elegant as Unreal Engine’s version. That example was my starting point, but too much refactoring was needed to reach a truly extensible framework like the one Killzone: Shadow Fall’s team built. So I designed a more redundant but decoupled architecture for the graph editor:

Basically it’s a variation of the classic MVVM or MVP design patterns; the node, pin and link are the core objects. When a canvas file is loaded, each node and pin model instance is created by the manager class (“factory”) from the descriptor instance (“prototype”), and the corresponding widget instance is created later by the widget rendering modules. I won’t show too many implementation details since they are not the major problem here; you can take a look at the source files if you want to know more. But let’s still catch a glimpse of how the actual object classes are declared:

namespace Waveless

{

enum class PinType

{

Flow,

Bool,

Int,

Float,

String,

Vector,

Object,

Function,

Delegate,

};

enum class PinKind

{

Output,

Input

};

enum class NodeType

{

ConstVar,

FlowFunc,

AuxFunc,

Comment

};

using PinValue = uint64_t;

struct PinDescriptor : public Object

{

const char* Name;

PinType Type;

PinKind Kind;

PinValue DefaultValue;

int ParamIndex;

};

struct ParamMetadata

{

const char* Name;

const char* Type;

PinKind Kind;

};

struct FunctionMetadata

{

const char* Name;

const char* Defi;

int ParamsCount = 0;

int ParamsIndexOffset = 0;

};

struct NodeDescriptor : public Object

{

const char* RelativePath;

const char* Name;

NodeType Type = NodeType::ConstVar;

int InputPinCount = 0;

int OutputPinCount = 0;

int InputPinIndexOffset = 0;

int OutputPinIndexOffset = 0;

FunctionMetadata* FuncMetadata;

int Size[2] = { 0 };

int Color[4] = { 0 };

};

}

The descriptor’s metadata file for the wave file loader node:

{

"NodeType": 2,

"Parameters": [

{

"Name": "FilePath",

"PinType": "String",

"PinKind": 1,

"ParamIndex": 0

},

{

"Name": "WaveObject",

"PinType": "Object",

"PinKind": 0,

"ParamIndex": 1

},

{

"Name": "EventPrototypeID",

"PinType": "Int",

"PinKind": 0,

"DefaultValue": 0,

"ParamIndex": 2

}

],

"Color": {

"R": 64,

"G": 64,

"B": 255,

"A": 255

}

}

And the function definition:

void Execute(std::string& in_FilePath, WavObject& out_WaveObject, uint64_t& out_eventPrototypeID)

{

out_WaveObject = WaveParser::LoadFile(in_FilePath.c_str());

out_eventPrototypeID = AudioEngine::AddEventPrototype(out_WaveObject);

}

I limited the possible data types of pin connections since it’s just a graph editor; the user shouldn’t be bothered by how a 32-bit unsigned integer works differently from a 16-bit signed one. The reference from a node descriptor to its pin descriptors is a typical indirection design: we use the global offset and count stored in the descriptor to access them in the pin descriptor array.
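A minimal sketch of that offset-and-count indirection, using trimmed-down stand-in types. PinDesc, NodeDesc and get_input_pin are hypothetical names for illustration, not the project’s actual code.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Trimmed-down, hypothetical stand-ins for the descriptor types above.
struct PinDesc
{
    const char* Name;
};

struct NodeDesc
{
    int InputPinCount = 0;
    int InputPinIndexOffset = 0; // index of the node's first input pin in the shared array
};

// Returns the i-th input pin of a node, looked up by offset + index in one
// shared array, so a node never owns its pins directly.
inline const PinDesc* get_input_pin(const std::vector<PinDesc>& allPins, const NodeDesc& node, int i)
{
    assert(i >= 0 && i < node.InputPinCount);
    const std::size_t index = static_cast<std::size_t>(node.InputPinIndexOffset + i);
    assert(index < allPins.size());
    return &allPins[index];
}
```

Keeping all pin descriptors in one flat array means a node descriptor stays a small, trivially serializable record; the cost is that the offsets have to be kept consistent whenever the array is rebuilt.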

The model classes:

namespace Waveless

{

struct NodeModel;

struct PinModel : public Object

{

PinDescriptor* Desc;

NodeModel* Owner = 0;

const char* InstanceName = 0;

PinValue Value;

};

enum class NodeConnectionState { Isolate, Connected };

struct NodeModel : public Object

{

NodeDescriptor* Desc;

NodeConnectionState ConnectionState = NodeConnectionState::Isolate;

int InputPinCount = 0;

int OutputPinCount = 0;

int InputPinIndexOffset = 0;

int OutputPinIndexOffset = 0;

float InitialPosition[2];

};

enum class LinkType { Flow, Param };

struct LinkModel : public Object

{

LinkType LinkType = LinkType::Flow;

PinModel* StartPin = 0;

PinModel* EndPin = 0;

};

}

A uint64_t is convenient: it can store any constant primitive pin value directly in the pin model, or a pointer to the actual value’s address. As you can see there are some duplicated fields; I would prefer to eliminate them, but it’s still acceptable for editor-related assets.
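One way to use a 64-bit slot like this is sketched below, assuming a platform where pointers are at most 64 bits wide. The helpers pack_float, unpack_float, pack_pointer and unpack_pointer are hypothetical and only illustrate the idea; the project may convert values differently.

```cpp
#include <cassert>
#include <cstdint>
#include <cstring>

using PinValue = uint64_t;

// Stores a float in the first bytes of a PinValue; memcpy avoids the
// undefined behavior of casting between unrelated pointer types.
inline PinValue pack_float(float value)
{
    PinValue packed = 0;
    std::memcpy(&packed, &value, sizeof(value));
    return packed;
}

inline float unpack_float(PinValue packed)
{
    float value = 0.0f;
    std::memcpy(&value, &packed, sizeof(value));
    return value;
}

// Larger values (strings, vectors) do not fit, so the slot holds their address instead.
inline PinValue pack_pointer(const void* address)
{
    static_assert(sizeof(void*) <= sizeof(PinValue), "pointer must fit in a PinValue");
    return static_cast<PinValue>(reinterpret_cast<std::uintptr_t>(address));
}

inline const void* unpack_pointer(PinValue packed)
{
    return reinterpret_cast<const void*>(static_cast<std::uintptr_t>(packed));
}
```

The slot itself carries no type information, so the pin’s PinType has to decide which of the two interpretations applies when the value is read back.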

Graph Compiler

For the sake of simplicity, I assume the user won’t create any loops between nodes in the canvas, so the graph is a DAG. In order to generate the correct C++ source file, we need to sort the nodes by execution flow, which is simple if we mark links with different connection types. My sort algorithm is quite naive: I just brute-force iterate over all the node models and store them by execution flow index. It hasn’t hurt performance much so far, but I assume there are a lot of more elegant and faster approaches:

struct NodeOrderInfo

{

NodeModel* Model;

uint64_t Index;

};

//......

// part of void GetNodeOrderInfos()

auto l_startNode = m_StartNode;

auto l_endNode = m_EndNode;

auto l_currentNode = l_startNode;

// Iterate over the execution flow

while (l_currentNode)

{

// Parameter dependencies

AddAuxFunctionNodes(l_currentNode, *l_links);

NodeOrderInfo l_nodeOrderInfo;

l_nodeOrderInfo.Model = l_currentNode;

l_nodeOrderInfo.Index = m_CurrentIndex;

m_NodeOrderInfos.emplace_back(l_nodeOrderInfo);

// Next node

auto l_linkIt = std::find_if(l_links->begin(), l_links->end(), [l_currentNode](LinkModel* val) {

return (val->StartPin->Owner == l_currentNode) && (val->LinkType == LinkType::Flow);

});

if (l_linkIt != l_links->end())

{

auto l_link = *l_linkIt;

l_currentNode = l_link->EndPin->Owner;

m_CurrentIndex++;

}

else

{

l_currentNode = 0;

}

}

//......

After getting all the node order information, it’s time to transform it into the actual C++ source file. It’s again a quite ugly, handcrafted stringstream; I’ll show some examples here:

void WriteExecutionFlows(std::vector<char>& TU)

{

WriteStartNode(TU);

for (auto node : m_NodeOrderInfos)

{

if (node.Model != m_StartNode)

{

if (node.Model->Desc->Type == NodeType::ConstVar)

{

WriteConstant(node.Model, TU);

}

else

{

WriteLocalVar(node.Model, TU);

WriteFunctionInvocation(node.Model, TU);

}

}

}

}

WsResult GenerateSourceFile(const char* inputFileName, const char* outputFileName)

{

GetNodeOrderInfos();

GetNodeDescriptors();

std::vector<char> l_TU;

WriteIncludes(l_TU);

WriteFunctionDefinitions(l_TU);

WriteScriptSignature(inputFileName, l_TU);

std::string l_scriptBodyBegin = "\n{\n";

std::copy(l_scriptBodyBegin.begin(), l_scriptBodyBegin.end(), std::back_inserter(l_TU));

WriteExecutionFlows(l_TU);

std::string l_scriptBodyEnd = "}";

std::copy(l_scriptBodyEnd.begin(), l_scriptBodyEnd.end(), std::back_inserter(l_TU));

auto l_outputPath = "..//..//Asset//Canvas//" + std::string(inputFileName) + ".cpp";

if (IOService::saveFile(l_outputPath.c_str(), l_TU, IOService::IOMode::Text) != WsResult::Success)

{

return WsResult::Fail;

}

return WsResult::Success;

}

And finally, invoke shell commands to generate the CMake project and build the plugin:

WsResult BuildPlugin()

{

auto l_cd = IOService::getWorkingDirectory();

std::string l_command = "mkdir \"" + l_cd + "../../Build-Canvas\"";

std::system(l_command.c_str());

#ifdef _DEBUG

l_command = "cmake -DCMAKE_BUILD_TYPE=Debug -G \"Visual Studio 15 Win64\" -S ../../Asset/Canvas -B ../../Build-Canvas";

#else

l_command = "cmake -DCMAKE_BUILD_TYPE=Release -G \"Visual Studio 15 Win64\" -S ../../Asset/Canvas -B ../../Build-Canvas";

#endif // _DEBUG

std::system(l_command.c_str());

l_command = "\"\"%VS2017INSTALLDIR%/MSBuild/15.0/Bin/msbuild.exe\" ../../Build-Canvas/Waveless-Canvas.sln\"";

std::system(l_command.c_str());

#ifdef _DEBUG

l_command = "xcopy /y ..\\..\\Build-Canvas\\Bin\\Debug\\* ..\\..\\Build\\Bin\\Debug\\";

#else

l_command = "xcopy /y ..\\..\\Build-Canvas\\Bin\\Release\\* ..\\..\\Build\\Bin\\Release\\";

#endif // _DEBUG

std::system(l_command.c_str());

return WsResult::Success;

}

One of the test canvases:

And the generated header and source files:

#define WS_CANVAS_EXPORTS

#include "../../Source/Core/WsCanvasAPIExport.h"

#include "../../Source/Core/Math.h"

#include "../../Source/IO/WaveParser.h"

#include "../../Source/Runtime/AudioEngine.h"

using namespace Waveless;

#pragma pack(push, 1)

struct MinimalTest_InputData

{

bool in_Start;

bool in_Stop;

Vector in_ListenerWorldPosition;

Vector in_EmitterWorldPosition;

};

#pragma pack(pop)

WS_CANVAS_API void EventScript_MinimalTest(MinimalTest_InputData inputData);

#include "MinimalTest.h"

void Execute_WaveLoader(std::string& in_FilePath, WavObject& out_WaveObject, uint64_t& out_eventPrototypeID)

{

out_WaveObject = WaveParser::LoadFile(in_FilePath.c_str());

out_eventPrototypeID = AudioEngine::AddEventPrototype(out_WaveObject);

}

void Execute_MinimalTest_Output(uint64_t in_eventInstanceID)

{

}

void Execute_MinimalTest_Input(bool in_Start, bool in_Stop, Vector in_ListenerWorldPosition, Vector in_EmitterWorldPosition)

{

}

void Execute_WavePlayer(bool in_Start, bool in_Stop, uint64_t in_eventPrototypeID, float in_Gain, float in_LPFCutOff, float in_HPFCutOff, uint64_t& out_eventInstanceID)

{

if (in_Start)

{

out_eventInstanceID = AudioEngine::Trigger(in_eventPrototypeID);

}

if (in_Gain != 0.0f)

{

AudioEngine::ApplyGain(out_eventInstanceID, in_Gain);

}

if (in_LPFCutOff != 20000.0f)

{

AudioEngine::ApplyLPF(out_eventInstanceID, in_LPFCutOff);

}

if (in_HPFCutOff != 0.0f)

{

AudioEngine::ApplyHPF(out_eventInstanceID, in_HPFCutOff);

}

}

void Execute_Attenuator(Vector& in_ListenerWorldPosition, Vector& in_EmitterWorldPosition, float& out_gain)

{

auto l_distanceFromListener = (in_ListenerWorldPosition - in_EmitterWorldPosition).Length();

if (l_distanceFromListener)

{

out_gain = Math::Linear2dBAmp(1.0f / (l_distanceFromListener * l_distanceFromListener));

}

else

{

out_gain = 0.0f;

}

}

WS_CANVAS_API void EventScript_MinimalTest(MinimalTest_InputData inputData)

{

Execute_MinimalTest_Input(inputData.in_Start, inputData.in_Stop, inputData.in_ListenerWorldPosition, inputData.in_EmitterWorldPosition);

std::string NoName_3764011140541903891 = "..//..//Asset//testC.wav";

WavObject WaveObject_3285133974272340789;

uint64_t EventPrototypeID_2726935047208135274;

Execute_WaveLoader(NoName_3764011140541903891, WaveObject_3285133974272340789, EventPrototypeID_2726935047208135274);

float Gain_3479971340518051646;

Execute_Attenuator(inputData.in_ListenerWorldPosition, inputData.in_EmitterWorldPosition, Gain_3479971340518051646);

float NoName_3109558878669456172 = 5000.000000;

float NoName_2953546187121584618 = 500.000000;

uint64_t EventInstanceID_3403570235478685868;

Execute_WavePlayer(inputData.in_Start, inputData.in_Stop, EventPrototypeID_2726935047208135274, Gain_3479971340518051646, NoName_3109558878669456172, NoName_2953546187121584618, EventInstanceID_3403570235478685868);

Execute_MinimalTest_Output(EventInstanceID_3403570235478685868);

}

I didn’t expect the entire implementation of the compiler to be this easy: with just some basic C++ language features I really could get compilable code. Indeed, it is nothing more than some simple string parsers and sorting algorithms. What I’d say is, a dirty codebase is not so bad!
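To make the “simple string parsers and sorting algorithms” remark concrete, here is a minimal sketch of the sorting half, assuming the graph is available as a list of nodes with input links. NodeDesc, SortNodes and EmitCalls are hypothetical names for illustration, not the project’s actual types, and the emitted calls omit the argument wiring that the real generated code has.

```cpp
#include <cassert>
#include <cstddef>
#include <queue>
#include <string>
#include <vector>

// Hypothetical node description: a function name plus the indices of the
// nodes whose outputs it consumes. Illustrative only.
struct NodeDesc
{
    std::string functionName;
    std::vector<std::size_t> inputs; // indices of upstream nodes
};

// Kahn's algorithm: returns node indices in an order where every node
// appears after all of its inputs. Returns an empty vector on a cycle.
std::vector<std::size_t> SortNodes(const std::vector<NodeDesc>& nodes)
{
    std::vector<std::size_t> inDegree(nodes.size(), 0);
    std::vector<std::vector<std::size_t>> downstream(nodes.size());

    for (std::size_t i = 0; i < nodes.size(); ++i)
    {
        for (auto input : nodes[i].inputs)
        {
            assert(input < nodes.size()); // a link must point at an existing node
            downstream[input].push_back(i);
            ++inDegree[i];
        }
    }

    // Nodes without inputs can be executed first.
    std::queue<std::size_t> ready;
    for (std::size_t i = 0; i < nodes.size(); ++i)
    {
        if (inDegree[i] == 0) ready.push(i);
    }

    std::vector<std::size_t> order;
    while (!ready.empty())
    {
        auto current = ready.front();
        ready.pop();
        order.push_back(current);
        for (auto next : downstream[current])
        {
            if (--inDegree[next] == 0) ready.push(next);
        }
    }

    if (order.size() != nodes.size()) order.clear(); // cycle detected
    return order;
}

// Emits one call statement per node, in dependency order.
std::string EmitCalls(const std::vector<NodeDesc>& nodes)
{
    std::string source;
    for (auto index : SortNodes(nodes))
    {
        source += "Execute_" + nodes[index].functionName + "();\n";
    }
    return source;
}
```

With a WavePlayer node that depends on a WaveLoader and an Attenuator, the loader and the attenuator are emitted before the player, which is the same ordering the generated EventScript_MinimalTest above follows.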

Plug-in Hook

Using cr is not very complex. I wrote a plugin manager class as the host module to manage multiple plugin instances, while the plugin hook entrance is compiled into the dynamic library when the code is generated. The entrance source file varies between graphs, so I use a template file to generate it:

#include "../../Source/Core/Logger.h"

#include "../../GitSubmodules/cr/cr.h"

#include "PluginName.h"

static unsigned int CR_STATE version = 1;

CR_EXPORT int cr_main(struct cr_plugin *ctx, enum cr_op operation)

{

if (operation == CR_UNLOAD)

{

Logger::Log(LogLevel::Verbose, "Plugin unloaded version ", ctx->version);

return 0;

}

if (operation == CR_LOAD)

{

Logger::Log(LogLevel::Verbose, "Plugin loaded version ", ctx->version);

return 0;

}

if (ctx->version < version)

{

Logger::Log(LogLevel::Verbose, "A rollback happened due to failure: ", ctx->failure);

}

version = ctx->version;

auto l_inputData = reinterpret_cast<PluginName_InputData*>(ctx->userdata);

if (l_inputData != nullptr)

{

EventScript_PluginName(*l_inputData);

}

return 0;

}

This plugin’s main function is invoked every time the host calls cr_plugin_update(), and if the input data is valid, we trigger the actual function.

As you can see in the video above, when I changed the file name from testA.wav to testC.wav and rebuilt the graph, the dynamic library was automatically reloaded, the global state (the version of the plugin in this case) was preserved correctly, and the execution flow changed to the updated version.
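The template-based generation of the entrance file can be sketched as a plain string substitution. This is only an assumption about how such a generator might work: InstantiateTemplate is a hypothetical helper, and the only detail taken from the template above is the PluginName placeholder.

```cpp
#include <cassert>
#include <cstddef>
#include <string>

// Replaces every occurrence of `placeholder` in `templateText` with `name`.
// An empty placeholder is treated as a programming error.
std::string InstantiateTemplate(std::string templateText, const std::string& placeholder, const std::string& name)
{
    assert(!placeholder.empty());

    std::size_t position = 0;
    while ((position = templateText.find(placeholder, position)) != std::string::npos)
    {
        templateText.replace(position, placeholder.size(), name);
        position += name.size(); // continue searching after the inserted text
    }
    return templateText;
}
```

For the graph in this article, the generator would call something like InstantiateTemplate(templateSource, "PluginName", "MinimalTest"), which turns PluginName.h into MinimalTest.h and EventScript_PluginName into EventScript_MinimalTest.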

Robustness is king

The overall development time of this project is around 50-100 coding hours, spread over 5 months, and I assume the maximum would be around 200-300 hours if we consider further extensions and refactoring for several improvements. Yes, I measured time rather than sLOC, because in my opinion the software architecture rules the codebase size and complexity in a rational and controllable project, and the only error introduced during the development cycle is always human error.

But you may object: “Are you sure this demo-level stuff could work in real game development?” The answer is, I don’t know, because everything I’ve done is R&D, and no game has actually used what I made. But let’s analyze a practical situation:

- The audio designer needs a new node to perform a new DSP effect or some logic;

- The audio programmer writes the node description and the node function source files;

- The audio programmer submits just the files directly to the version control system;

- The audio designer reopens the editor, adds the new node, builds the dynamic library, and in the game it’s automatically hot-reloaded.

Which step would be unpredictable? For step 1, an innovative idea is always harmless. For the implementation in step 2, if the node function doesn’t rely on any global or external state, it’s easy to make it pure (generally speaking, we should prefer pure functions in the node graph to keep everything procedural!). A VCS submission error in step 3 should not be counted here. And in the last step, we obviously don’t want to see something like Build: 0 succeeded, 1 failed appearing in the console after hitting the compile button.

So, is the node compiler robust? I’d say never. A C++ compiler should only compile a text file that is written within the grammar of the C++ standard, and a node graph compiler should only compile a node graph that is written within the grammar of a node graph standard. But we don’t have any self-constrained node graph standard here! Even the C++ standard keeps evolving over the years. I think we should stay consistent with our software development principles in such situations: focus only on the problem (a new design) and give the solution (a new node), and only if the solution brings a new problem (it cannot compile) do we deal with it (extend the compiler).

Another practical problem comes from asset management. A real game shouldn’t frequently load small individual files from disk; it’s a meaningless waste. The improvement is clear and has already been provided by other audio middleware: pack the audio files into a soundbank file. Then, as soon as the game is loaded, you can load an entire soundbank file and use handles to index the actual samples. Such a design is very easy to implement; all you need is a map between the label, the UUID, the global offset, and the sample size. But in a development environment we would prefer to load individual files for fast iteration. Middleware like FMOD Studio and Wwise does not have such flexibility because their runtime modules only support their packed soundbank files. Each time you want to replace a single sample in a bank, you have to reload the entire bank if it’s loaded by the game.
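As a rough illustration of that map, here is a minimal in-memory sketch. SampleEntry and SoundBank are hypothetical names, not part of the project, FMOD Studio or Wwise, and a real bank would also need a file format and streaming on top of this.

```cpp
#include <cassert>
#include <cstdint>
#include <string>
#include <unordered_map>
#include <vector>

// Hypothetical table entry: where one sample lives inside the bank data.
struct SampleEntry
{
    std::uint64_t uuid;
    std::uint64_t offset; // byte offset from the start of the bank data
    std::uint64_t size;   // sample size in bytes
};

struct SoundBank
{
    std::vector<std::uint8_t> data;                     // all samples packed back to back
    std::unordered_map<std::string, SampleEntry> index; // label -> location

    // Appends the sample bytes to the bank; returns false if the label is already taken.
    bool AddSample(const std::string& label, std::uint64_t uuid, const std::vector<std::uint8_t>& bytes)
    {
        if (index.count(label) != 0) return false;
        index[label] = SampleEntry{ uuid, data.size(), bytes.size() };
        data.insert(data.end(), bytes.begin(), bytes.end());
        return true;
    }

    // Returns a pointer to the sample bytes and writes their size to out_size,
    // or returns nullptr if the label is unknown. The pointer is only valid
    // until the next AddSample call, because the data vector may reallocate.
    const std::uint8_t* Find(const std::string& label, std::uint64_t& out_size) const
    {
        auto it = index.find(label);
        if (it == index.end()) return nullptr;
        out_size = it->second.size;
        return data.data() + it->second.offset;
    }
};
```

In a shipping build the whole data block would be read from disk once, while a development build could keep the same lookup interface and resolve each label to an individual file instead.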

The final complaint from the audio engineer would be about mixing. What I built here is a solution for game audio logic, while mixing is all about how to control the states of the audio signal chain. Some local-scope DSP nodes like a side-chain compressor or an EQ could be added, and an overall mixing editor could also be injected into the audio engine module if necessary. I prefer a group-based or scenario-based game audio mixing approach, while others prefer a more traditional bus-based solution; all of them would require a further extension to the audio engine itself.

Considered rationally, all these concerns are addressable. The riskier problem you’d encounter is how quickly your team members adopt the new solution. But I think such a node graph solution is way more concise and easier to learn if you have some Max/MSP, Pure Data or Unreal Blueprint experience: there are no new concepts that differ from what you already know from traditional audio production, and the change to the workflow is minimal. You still open your favorite DAW for sample design at the beginning, then the game and the node graph editor are open too, and from that point on you keep experimenting with your design ideas, pushing the “compile” button and auditioning the result in the game. The workflow barrier disappears naturally!

So what’s the conclusion? My view is that if your game dev team has at least one person in charge of game audio programming, then it’s still totally possible to build your own audio solution like this. Even if you’re targeting a console platform, the only difference is that you would implement the low-level audio engine with the console’s audio backend API instead, and link against its libraries for the timer, logger, memory management and so on. You don’t have to spend time on highly sophisticated DSP algorithms; the improvement in the final game audio quality comes more from the improvement of the working pipeline. With the same 300 hours spent on the codebase, you could give your audio designers a flexible and user-friendly toolchain with which they could produce millions of amazing designs, rather than just a 10% more realistic sound propagation algorithm. After all, the game is more about art than tech, don’t you think so?